Qualcomm’s AI Gamble: From Smartphones to Server Racks

Qualcomm is stepping out of its mobile comfort zone with a bold push into AI infrastructure. Its new inference-focused datacentre chips aim to challenge Nvidia and AMD with lower power draw and cost-efficient performance.

Today marks a significant turning point for Qualcomm (QCOM) as the San Diego-based chipmaker unveils its strategy to step into the heavy-duty world of AI infrastructure. The company announced two next-generation accelerator solutions — the AI200, set to launch in 2026, and the AI250, scheduled for 2027 — both built for “inference-first” applications rather than model training.

What does this move mean?

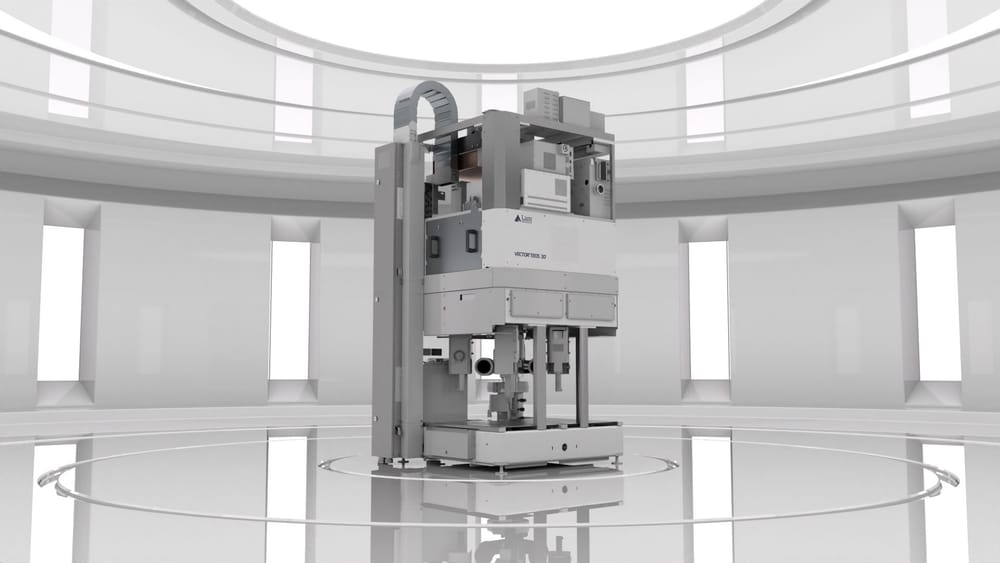

Qualcomm’s ambition is clear: it is no longer content to dominate only the smartphone and mobile-compute market with its Snapdragon processors and Hexagon NPU technology. It is entering the datacentre arena, a market long dominated by Nvidia Corporation and Advanced Micro Devices, Inc. (AMD). The company said its new chips will support memory architectures with “more than 10×” memory bandwidth improvement, and will ship in rack-scale systems (up to 72 chips per rack) tailored for inference workloads. If those claims hold, AI-enabled services such as large language models and multimodal AI could be served more efficiently.

Qualcomm’s heritage lies in mobile: the Hexagon neural processing unit has powered years of on-device AI in smartphones. The company is now repackaging and scaling that know-how for the datacentre, promising lower power draw, tighter integration of compute, memory and networking, and a software stack that eases deployment in enterprise and cloud settings. The first disclosed customer is Saudi-based Humain, which plans to deploy 200 megawatts of these systems starting in 2026.

Application areas and ecosystem implications

Because Qualcomm is focusing on inference rather than training, the target applications are real-time AI services in production: chatbots, recommendation engines, image and video understanding, autonomous vehicle stacks, edge AI and multimodal AI. Total cost of ownership (TCO) and energy efficiency are central. For cloud operators, inference workloads have exploded, so the opportunity to differentiate via lower-cost hardware is real.

Qualcomm’s choice of inference also means it is following a different playbook from the one behind training-centric GPUs (which rely heavily on floating-point throughput and massive interconnect bandwidth). By offering rack-scale solutions optimized for lower power, Qualcomm hopes to win deployments where cost per inference, heat and power budgets, and footprint matter. The tie-up with Humain underscores its ambition in non-US geographies and national-scale AI buildouts, which is potentially a strategic advantage.
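To make the "cost per inference" argument concrete, here is a minimal back-of-the-envelope sketch of the electricity component alone. Every number in it (rack power, throughput, electricity price) is a hypothetical placeholder, not a Qualcomm, Nvidia or AMD specification, and the function name is my own. A real TCO comparison would also include hardware amortisation, cooling, networking and staffing.

```python
def energy_cost_per_million_inferences(rack_power_kw, inferences_per_second, price_per_kwh):
    """Electricity cost of serving one million inferences on a single rack.

    All inputs are illustrative assumptions supplied by the caller:
      rack_power_kw         -- average rack power draw in kilowatts
      inferences_per_second -- sustained rack throughput
      price_per_kwh         -- electricity price per kilowatt-hour
    """
    if inferences_per_second <= 0:
        raise ValueError("inferences_per_second must be positive")
    # Time needed to serve one million inferences, in seconds.
    seconds = 1_000_000 / inferences_per_second
    # Energy consumed over that time, converted from kW-seconds to kWh.
    energy_kwh = rack_power_kw * seconds / 3600
    return energy_kwh * price_per_kwh


if __name__ == "__main__":
    # Two hypothetical racks with equal throughput but different power draw.
    baseline = energy_cost_per_million_inferences(120, 50_000, 0.10)
    efficient = energy_cost_per_million_inferences(80, 50_000, 0.10)
    print(f"baseline rack:  {baseline:.4f} per million inferences")
    print(f"efficient rack: {efficient:.4f} per million inferences")
```

At equal throughput, the energy cost scales linearly with power draw, which is why a vendor competing on efficiency needs to show that its throughput per watt holds up on real workloads.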

Chances of success — realistic hurdles and opportunities

While Qualcomm’s move is bold, success is far from guaranteed. The competitive landscape is unforgiving. Nvidia’s ecosystem is deeply entrenched, with years of software optimisations and enterprise trust behind it. AMD and Broadcom Inc. are also racing to build inference- and training-capable hardware. Qualcomm needs not only hardware performance but also robust software, developer tools, ecosystem integration and credibility with enterprise buyers.

However, Qualcomm has meaningful strengths. Its mobile-AI heritage gives it experience in power-efficient neural processing; its new architecture claims major memory-bandwidth gains; and the enterprise need for alternative, cost-effective inference hardware is growing. Analysts report that Qualcomm’s stock jumped by as much as roughly 19% to 22% today on the announcement, signalling investor belief that the timing could be right.

That said, the ramp-up timeline (2026 and 2027) means Qualcomm must maintain momentum, manage its supply chain, prove real-world performance and build enterprise relationships. If any one of those falters, the effort may stall. The shift from mobile to datacentre is non-trivial: customer expectations, support models and sales cycles all differ. But if Qualcomm executes, it may carve out a credible niche in inference-centric AI infrastructure, especially in regions or applications where cost and energy are constraints.

In the broader view, Qualcomm’s entry underscores the maturation of the AI-hardware market. As generative and multimodal AI services proliferate, the demand is not just for more training power; it is for efficient inference at scale, distributed across edge, cloud and hybrid architectures. For Qualcomm, today’s announcement is a strategic leap. The question now is whether it can deliver and scale, and how incumbents respond.

Author

Investment manager, forged by many market cycles. Learned a lasting lesson: real wealth comes from owning businesses with enduring competitive advantages. At Qmoat.com I share my ideas.